Cloud storage these days really allows any volume of data to be geo redundantly stored, always available and at a fraction of the price of 10 years ago. This is “a good thing”. One common problem I’ve seen is the amount of bandwidth wasted when updating existing blobs. Say you have a 10M file in cloud storage, you download and modify a small section of it, how do you update the version in cloud version?

1) Upload entire file again. Wasteful of bandwidth, time and money but sadly often the solution used since it’s the easiest option.

2) Keep some internal tracking system to determine what’s been changed and what hasn’t. Then use this information to only upload the modified part of the blob.

What follows is an enhanced version of #2 which can dramatically reduce bandwidth requirements when updating blobs in Azure Blob Storage.

For those who don’t know about Azure Block Blobs, the basic idea is that blobs (files in the cloud) are broken into a number of chunks/blocks. Each block can be uploaded/downloaded/replaced/deleted individually which in turn means manipulation of the blob can be done in chunks rather than all or nothing.(see this for more details).

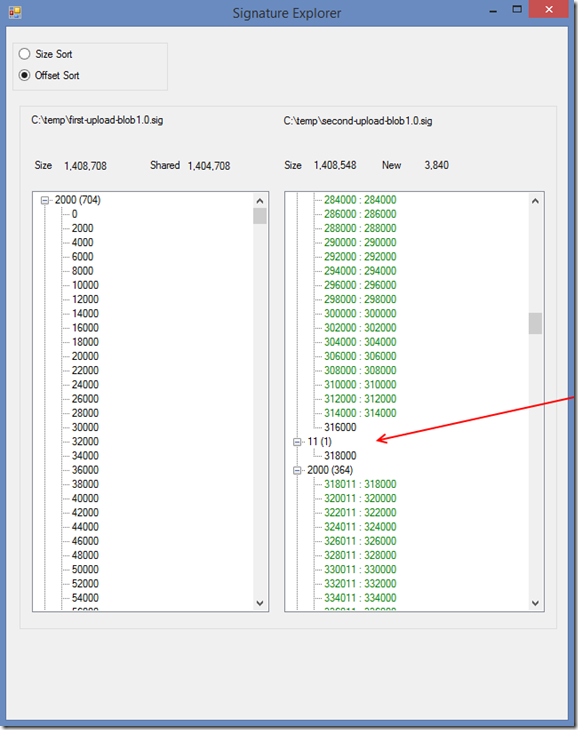

Anyway, back to the problem. Imagine you have a blob, you then download the blob, modify it and now want to upload the new version.

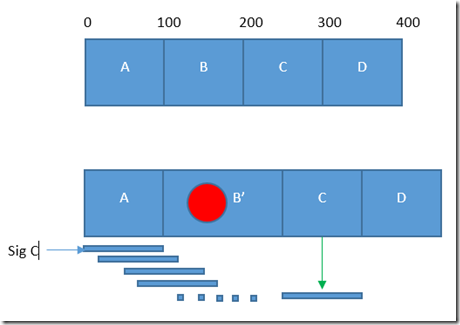

For this scenario the problem is easy. We have a blob (top row) which is made of 4×100 byte blocks. Some of the contents of the second block (between bytes 100 and 200) are replaced. The size and more importantly the offset locations of all blocks stay consistent. Determining that some of the blocks are unmodified is easy, and we simply upload the new version of the second block. Unfortunately the real world isn’t always like this. What happens when we get this situation?

In this scenario, when the “uploading program” needs to determine what blocks can be reused and what parts need to be replaced. The contents of blocks A,C and D exist in the cloud blob (top row) as well as the new version of the file (bottom row). The problem is that although contents of blocks C and D existing in the new file, their locations in the file have moved. This is the challenge, detecting that blocks in the cloud can be reused even though their location in the new blob have moved.

Now that we know the problem (data blocks are available for reuse but are in unexpected offsets) we can start searching for a solution. The approach I’ve taken is to keep some unique signatures of each block already in the cloud and then look for the same signatures (hashes) in the new version of the file which is being uploaded.

The calculations required to find the new offsets are huuuuuge, well potentially huge, well “quite large” would cover it. For each block that exists in the Azure blob we need to search at every byte offset in the new file. To put it simply, if the file is 100M in size, and we’re searching for a block that is 10M in size, then the number of comparisons required is (approx) 100 million – 10 million = 90 million.

For example:

In the above diagram, we want to determine if block C (that already exists in the cloud) also exists in the updated version of the file.

The process taken is:

0) Set offset to 0.

1) Let SizeC represent the size (in bytes) of block C.

2) Let SigC represent the unique signature of block C.

3) Read SizeC bytes (starting at offset) of the new blob into byte array.

4) Generate the signature of byte array.

5) If the new signature matches SigC then we know that when we’re uploading the new file we’re able to reuse block C!

6) If the new signature does NOT match SigC, then increment offset by 1 and jump back to step 3.

As the diagram shows, eventually we’ll end up finding block C and therefore know we do not need to upload that part of the new file. What I hope is obvious is that a LOT of signature generation needs to happen as well as lots of comparisons.

The key for the entire process to become practical is the ability to do VERY quick signature generation so the 90M calculations don’t become an issue. Enter “rolling hash signatures” (see Wikipedia for more detailed explanation). Be warned, if you Google/Bing for rolling hash, you’ll probably get some rather different results to what you were expecting. 🙂

The way rolling hash signatures are generated is essential for this process to be quick enough to be practical. There are 2 ways of generating the signature:

Firstly, you can read N bytes from a file, perform some calculation on the array of bytes and end up with your signature. Easy peasy, but “slow”.

The other option (and this is the magic) is that if you have already generated a signature for bytes 0 to 3 (for example) you can simply generate the signature for bytes 1 to 4 (ie shifting the byte array by 1) by performing a simple calculation based off the old signature.

For example:

Now, it’s not literally Sig0 – previous byte + next byte, but it’s pretty close. We’re able to calculate signatures quickly and easily, this allows us to detect common byte arrays between the new file and the existing blob.

Although I haven’t yet covered the precise algorithm used for the signature generation we now have the basic building blocks for determining what parts of an update blob actually need to be uploaded.

Steps for updating modified block based blob:

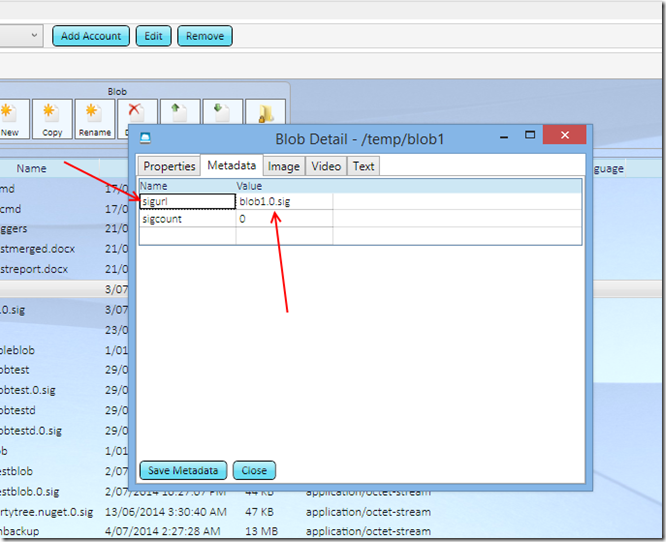

(assumption is that when blob was originally uploaded, the block signatures were also calculated and uploaded. Trivially easy thing to do)

1) Download Blob from Azure.

2) Download Block signatures from Azure.

3) Modify downloaded blob/file to hearts delight.

4) Now we need to determine which blocks that already exist in the cloud can be reused (ie we don’t need to upload that data) and which parts have been modified.

5) Loop through every block signature downloaded

5.1) Perform the rolling signature check for entire new file.

5.2) If found, make note which Azure block can be reused.

5.3) If not found, make note of which bytes are “new” and need to be uploaded.

6) You now have 2 lists; one of new bytes to upload (offset in new file) and one that contains Azure blocks that can be reused.

7) Upload the “new bytes” as their own Azure blob blocks.

8) Instruct Azure Blob Storage which blocks (new and old) can be used to construct a blob which is bitwise identical to the modified local file.

All of the above is implemented and available on Github and eventually on Nuget. The next post will cover how to practically use these libraries and what the future plans are.

btw, for anyone taking notes, yes the entire blob post could have been summarised as “RSync + Azure Block Blobs”… but thought I’d flesh it out a little 🙂