After quite a few comments asking for a consistent set of tools to perform basic operations on Azure Storage resources (blobs, queues and tables), I’ve decided to write a new set of tools called “Azure Storage Tools” (man, I’m original with naming; I should be in marketing).

The primary aims of AST are:

- Provide a cross-platform set of tools, so there is one consistent tool across a bunch of platforms.

- Be able to do all the common operations for blobs/queues/tables.

    - e.g. for blobs we should be able to create containers, upload/download blobs, list blobs, etc. You get the idea.

- Be completely easy to use: a simple tool with a simple set of parameters, so that commands are fairly “guessable”.

Instead of a single binary that handles blobs, queues and tables, I’ve decided to split this into three, one per resource type. First cab off the rank is blobs! The tool/binary name is astblob (again, marketing GENIUS at work!!).

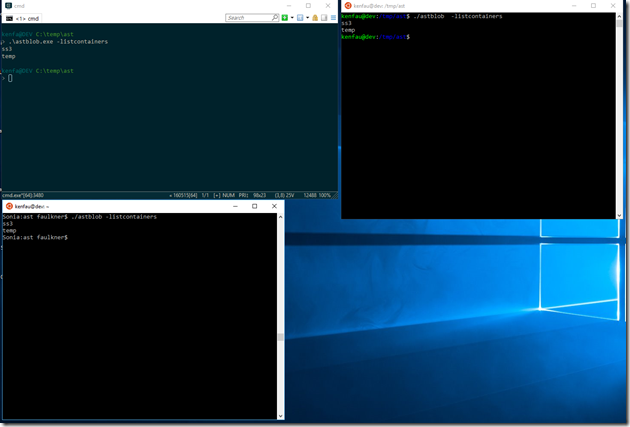

If a picture is worth a thousand words, here’s three thousand words’ worth.

Firstly, in this image we have three machines all running astblob: a Windows machine, a Linux machine and an OSX machine. Each of them is connected to the same Azure Storage account (configured through environment variables), and we’re simply asking for the list of containers in that account.

Easy enough. Now, for some more details.

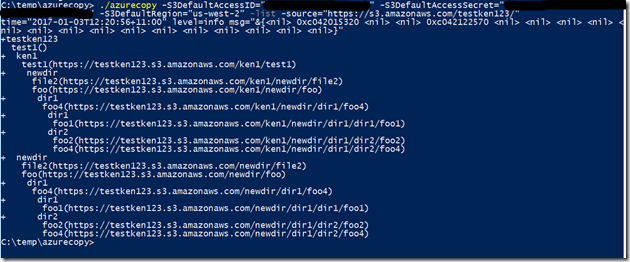

Here we’re asking for the blobs in the “temp” container. Remember that Azure Storage (like S3) doesn’t really have the concept of directories within containers/buckets. Some blob names contain ‘/’ to “fake” directories, but that ‘/’ is really just part of the blob name.
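To make the “fake directories” point concrete, here is a small Python sketch of how a flat list of blob names can be grouped into virtual directories, similar to what a delimiter-based listing does: everything after the next ‘/’ is folded into a single directory entry. This is an illustration only, not astblob’s code; `list_level` is a hypothetical helper and the blob names are made up (apart from “ken1”, which appears in the screenshots).

```python
def list_level(blob_names, prefix="", delimiter="/"):
    """Split flat blob names into (virtual directories, blobs) at one level.

    There are no real directories: "ken1/a.txt" is a single blob whose
    name happens to contain a '/'. Hypothetical helper for illustration.
    """
    dirs = set()
    blobs = []
    for name in blob_names:
        if not name.startswith(prefix):
            continue  # not under the requested "directory"
        rest = name[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # The name continues past a '/', so only report the virtual dir.
            dirs.add(prefix + head + delimiter)
        else:
            blobs.append(name)
    return sorted(dirs), sorted(blobs)


names = ["readme.txt", "ken1/a.txt", "ken1/b.txt", "ken1/sub/c.txt"]
print(list_level(names))                  # (['ken1/'], ['readme.txt'])
print(list_level(names, prefix="ken1/"))  # (['ken1/sub/'], ['ken1/a.txt', 'ken1/b.txt'])
```

The service only ever stores the four flat names; the “directories” exist purely because we choose to split on ‘/’.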

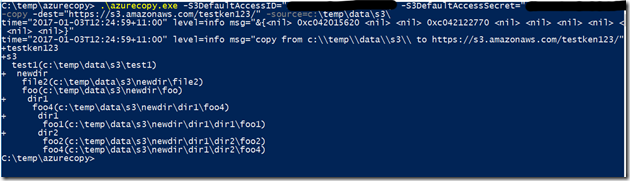

So now what happens if we download the temp container?

Here we download the temp container to a location on the local filesystem. You’ll see that the blob whose name contained “fake” directories has had those directories created for real (an executive decision on my part, but I believe this is by FAR what most people want). In both the Windows and *nix environments the “ken1” directory was created and (although not shown in the screenshot) the files are inside it.
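The directory-creation behaviour can be sketched in a few lines of Python. This is my own illustration under stated assumptions, not astblob’s actual implementation; `local_path_for_blob` and `save_blob` are hypothetical names. Each ‘/’-separated segment of the blob name becomes a real directory joined with the local OS’s separator, and names that would escape the download folder are rejected.

```python
import tempfile
from pathlib import Path, PurePosixPath


def local_path_for_blob(dest_root, blob_name):
    """Map a '/'-separated blob name to a local path under dest_root."""
    # Blob names always use '/', regardless of the local OS.
    posix = PurePosixPath(blob_name)
    parts = posix.parts
    # Minimal safety check (not an exhaustive sanitiser): refuse empty or
    # absolute names, '..' segments, and segments Windows would treat as
    # separators or drive letters.
    if not parts or posix.is_absolute() or any(
        p == ".." or "\\" in p or ":" in p for p in parts
    ):
        raise ValueError(f"unsafe blob name: {blob_name!r}")
    # joinpath uses the local separator, so each segment becomes a real folder.
    return Path(dest_root).joinpath(*parts)


def save_blob(dest_root, blob_name, data):
    """Write downloaded blob bytes to disk, creating the 'fake' directories."""
    target = local_path_for_blob(dest_root, blob_name)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(data)
    return target


with tempfile.TemporaryDirectory() as root:
    path = save_blob(root, "ken1/notes/a.txt", b"hello")
    print(path.relative_to(root).as_posix())  # ken1/notes/a.txt
```

Because `pathlib` builds the path with the local separator, the same blob lands at `ken1\notes\a.txt` on Windows and `ken1/notes/a.txt` on Linux and OSX.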

astblob is just the first tool from the AST suite to be released. The plan is for the queue and table tools to follow shortly, along with more functionality for blobs.

AST is available on GitHub, and binaries are under the usual Releases link there. Binaries are generated for Windows, OSX, Linux, FreeBSD, OpenBSD and NetBSD (each with 386 and AMD64 variants), although I have only personally tested Windows, Linux and OSX.

The AST tools (although three separate binaries) will all be self-contained. The astblob binary is literally a single file; no associated libraries need to be copied along with it.

Before anyone comments: yes, the official Azure CLI 2 handles all of the above and more, but it has more dependencies (rather than being a single binary) and is a lot more complex. AST is just aimed at simple, common tasks…

Hopefully more people will find a consistent tool across multiple platforms useful!